History of Computers: From Ancient to Modern Innovations

Updated: 16 Nov 2024


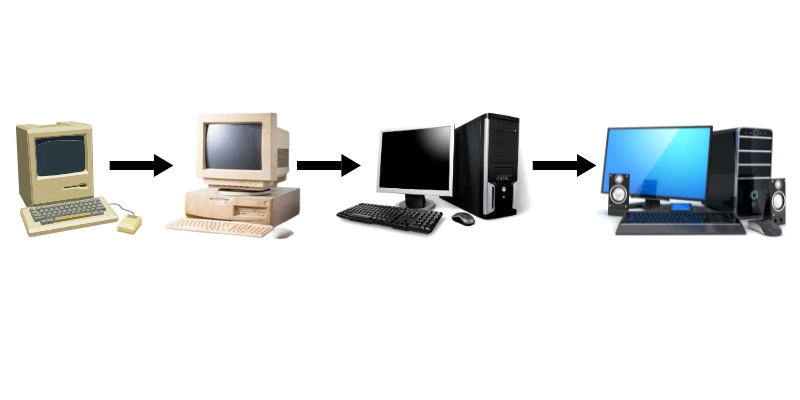

The history of computers is a fascinating journey that spans centuries, with contributions from many brilliant minds and numerous technological advancements. Here’s an overview of the major milestones in the development of computers:

1. Pre-Computer Era: Early Calculating Devices

Before the invention of modern computers, various devices were used for calculation and problem-solving.

- Abacus (c. 2300 BCE): One of the earliest tools for calculation, originating in ancient Mesopotamia, the abacus was used for basic arithmetic operations.

- Antikythera Mechanism (c. 100 BCE): A sophisticated analog device found in an ancient Greek shipwreck, believed to be used for astronomical calculations and predictions.

- Napier’s Bones (1617): John Napier, a Scottish mathematician, invented a set of rods (called “Napier’s Bones”) for simplifying multiplication and division.

2. The Concept of Automation (17th – 19th Century)

In the 17th century, the idea of automating calculation took shape, laying the groundwork for future developments.

- Blaise Pascal (1642): Pascal invented the “Pascaline,” an early mechanical calculator that could add and subtract numbers.

- Gottfried Wilhelm Leibniz (1673): Leibniz improved upon Pascal’s design with his “Stepped Reckoner,” which could also perform multiplication and division.

- Charles Babbage (1830s): Often referred to as the “father of the computer,” Babbage conceptualized the Analytical Engine, a mechanical device capable of performing any arithmetic operation. Though never fully built, the Analytical Engine included key components of modern computers, such as a control unit, memory, and input/output.

3. The Birth of Modern Computers (1930s – 1940s)

The 1930s and 1940s saw the theory of computation take shape and the first programmable and electronic computers appear, which were far faster than purely mechanical devices.

- Alan Turing (1936): Turing developed the concept of a theoretical machine, now known as the Turing Machine, which could simulate any algorithm. This laid the foundation for the modern theory of computation and programming.

- Konrad Zuse (1936-1941): A German engineer, Zuse completed the Z3 in 1941. Built from electromechanical relays, it is generally regarded as the first working programmable, fully automatic digital computer. The Z3 was used for aircraft design calculations.

- John Atanasoff and Clifford Berry (1937-1942): Atanasoff and Berry developed the Atanasoff-Berry Computer (ABC), which is considered one of the earliest electronic digital computers, designed to solve systems of simultaneous linear equations.

- Colossus (1943): During World War II, British engineer Tommy Flowers built Colossus, an electronic computer used at Bletchley Park to help break the German Lorenz cipher.

- ENIAC (1945): The Electronic Numerical Integrator and Computer (ENIAC), developed by John Presper Eckert and John W. Mauchly, is generally regarded as the first programmable, fully electronic general-purpose computer. It was completed in 1945 and publicly unveiled in 1946. ENIAC was massive and required a team to operate, but it could perform a variety of tasks much faster than earlier mechanical and electromechanical machines.

4. The Mainframe Era (1950s – 1960s)

After World War II, electronic computers began to evolve rapidly.

- UNIVAC (1951): The Universal Automatic Computer (UNIVAC I), developed by Eckert and Mauchly, was the first commercial computer produced in the United States. The first unit was delivered to the U.S. Census Bureau, and later units went to other government agencies and businesses.

- IBM 700 Series (1950s): IBM introduced its line of mainframe computers, which became the standard for business and government computing for many years.

- Transistors (1947): The invention of the transistor at Bell Labs by John Bardeen, Walter Brattain, and William Shockley revolutionized computing by replacing bulky vacuum tubes with smaller, more reliable components. Once transistorized machines arrived in the late 1950s, computers became faster, smaller, and more affordable.

5. The Microprocessor Revolution (1970s – 1980s)

The development of microprocessors marked the beginning of personal computing.

- Intel 4004 (1971): Intel introduced the 4004, the first commercially available microprocessor, which placed the essential components of a computer’s central processing unit (CPU) on a single chip.

- Altair 8800 (1975): The Altair 8800, developed by MITS, was one of the first personal computers and was sold as a build-it-yourself kit. It gained popularity among hobbyists, and Bill Gates and Paul Allen founded Microsoft to sell a BASIC interpreter for it.

- Apple I and II (1976-1977): Steve Jobs, Steve Wozniak, and Ronald Wayne founded Apple and introduced the Apple I in 1976. Its successor, the Apple II (1977), was among the first commercially successful personal computers.

- IBM PC (1981): IBM launched the IBM Personal Computer (PC), which standardized the personal computer industry and helped pave the way for the widespread use of computers in homes and businesses.

- Macintosh (1984): Apple introduced the Macintosh, which featured a graphical user interface (GUI), a departure from the command-line interfaces of most computers at the time.

6. The Rise of the Internet and Networking (1990s – Early 2000s)

The 1990s saw the widespread adoption of the internet and networking technologies, which transformed the way computers were used.

- World Wide Web (1990): Tim Berners-Lee, a British computer scientist, invented the World Wide Web (WWW) to share information over the internet using hyperlinks and browsers. This revolutionized access to information.

- Windows 95 (1995): Microsoft released Windows 95, which brought built-in TCP/IP networking and dial-up support to a mass-market operating system and helped make internet access a standard part of personal computing.

- The Dot-com Boom (late 1990s): The internet led to the rise of online businesses and services, and companies like Amazon, eBay, and Google were founded, transforming the digital economy.

7. The Era of Mobile Computing and Artificial Intelligence (2000s – Present)

In the 21st century, computers became more powerful, portable, and integrated into everyday life.

- Smartphones and Tablets (2000s): With the introduction of the iPhone in 2007 and subsequent devices, smartphones became the dominant computing platform. Tablets like the iPad also gained popularity, furthering the trend of portable computing.

- Cloud Computing (2000s): Services like Amazon Web Services (AWS) and Google Cloud enabled individuals and businesses to store data and run applications over the internet, rather than relying on local servers.

- Artificial Intelligence (AI) and Machine Learning (2010s – Present): Computers now perform advanced tasks such as image recognition, language processing, and autonomous driving. AI and machine learning are transforming industries from healthcare to finance.

- Quantum Computing (Emerging): While still in the early stages, quantum computing promises to revolutionize computing by leveraging the principles of quantum mechanics to solve problems that are currently intractable for classical computers.

8. The Future of Computing

The future of computers is likely to be characterized by even more powerful hardware, faster networks, and new forms of human-computer interaction. Some of the exciting developments on the horizon include:

- Quantum Computing: As mentioned, quantum computers are expected to solve complex problems in fields such as cryptography, materials science, and AI, far beyond the capabilities of traditional computers.

- Neuromorphic Computing: This field aims to design computers that mimic the structure and function of the human brain, offering possibilities for more efficient and intelligent machines.

- AI and Automation: With advances in AI, computers will continue to automate complex tasks, from driving vehicles to diagnosing diseases.

- Metaverse and Virtual Reality (VR): Virtual and augmented reality technologies are becoming more immersive, creating new possibilities for entertainment, education, and work.

What was the first computer in history?

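The answer depends on the definition. Charles Babbage’s Analytical Engine (1837) is widely considered the first design for a general-purpose computer, though it was never completed. The first working programmable computer was the electromechanical Z3 (1941), built by Konrad Zuse, and the first fully electronic general-purpose computer was ENIAC (1945).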

Who is the father of computers?

Charles Babbage is known as the “father of the computer” for his design of the Analytical Engine, the first design for a mechanical general-purpose computer.

What is the brain of the computer?

The CPU (Central Processing Unit) is considered the “brain” of the computer, as it performs calculations and executes instructions.

What is the full form of computer?

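“Computer” is not actually an acronym. The word comes from the verb “compute” and originally referred to a person who performed calculations. The expansion often given, Common Oriented Machine Purposely Used for Technical and Educational Research, is a backronym invented after the fact, not the origin of the word.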

Conclusion

The history of computers is a testament to human ingenuity, from the earliest counting devices to the powerful, interconnected systems of today. Each breakthrough in the field has built upon the previous one, and computers continue to evolve in ways that were once unimaginable, shaping virtually every aspect of modern life.
